Why we fired our monolithic LLM (Multi‑Agent was the solution)

Alim Goulamhoussen

Published on 20.02.26

4 min


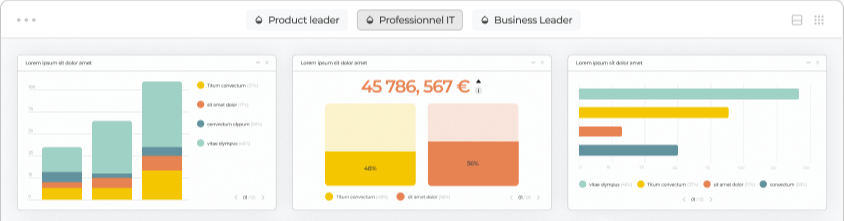

We hit a wall with the "one LLM does everything" approach. Here's what we learned and how we rebuilt our AI assistant around a team of specialists instead.

When someone asks "Show me total sales by region" or "What data do I have?", it sounds simple.

Behind the scenes, though, you need to parse intent, understand the schema, build the right query, pick a chart type, configure a visualization, and render it—all without guessing or looping. Early on, we tried handing all of that to a single model. It didn't go well.

At Toucan we've moved to a multi-agent architecture: one central orchestrator that plans and delegates to specialized agents.

It's made the system more predictable, easier to maintain, and a lot less fragile. If you're weighing similar choices—or just curious how we think about it—here's our take.

The problem with one model doing everything

We started with a single LLM that was supposed to handle the whole flow: interpret the request, explore the schema, write the query, suggest visualizations, format the answer. In theory it's elegant. In practice we ran into:

- Inconsistent behavior – One change to fix exploration could break how we handled chart requests. Everything was tangled.

- Loops and retries – Without clear boundaries, the model would sometimes call the same failing tool again and again. Hard to debug, painful for users.

- Slow iteration – Improving one part of the experience meant touching everything. Our velocity dropped.

Splitting the work across focused agents fixed a lot of that. Each agent has a narrow job. Clear ownership. And we can improve one without fear of breaking the others.

From a CTO perspective, that's exactly what you want: a system that scales with your team, not against it.

How we structured it

We use a hierarchical setup. One orchestrator sits in the middle. Every user request hits it first. It classifies intent, creates a structured plan, and delegates to the right specialist—or handles simple stuff directly.

No sub-agent touches the user; they all report back to the orchestrator, which shapes the final response.

[Image: Toucan Agents]

Simple requests—"Hi", "Thanks", "What can you do?"—never hit the specialists. The Orchestrator Agent answers directly. That keeps latency low and cost down.
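A minimal Python sketch of this routing step, under stated assumptions: the `Intent` enum, the keyword lists, and the `classify` and `route` functions are hypothetical names invented for illustration. The keyword matching only stands in for whatever classification the orchestrator's model performs.

```python
from enum import Enum


class Intent(Enum):
    SMALL_TALK = "small_talk"
    EXPLORE = "explore"
    QUERY = "query"
    CHART = "chart"


# Hypothetical keyword rules; they stand in for model-based intent classification.
SMALL_TALK = {"hi", "hello", "thanks", "what can you do?"}
EXPLORE_HINTS = ("what tables", "what data", "what's in my database")
CHART_HINTS = ("chart", "plot", "graph")


def classify(message: str) -> Intent:
    text = message.strip().lower()
    if text in SMALL_TALK:
        return Intent.SMALL_TALK
    if any(hint in text for hint in EXPLORE_HINTS):
        return Intent.EXPLORE
    if any(hint in text for hint in CHART_HINTS):
        return Intent.CHART
    # Anything else is treated as a request for numbers.
    return Intent.QUERY


def route(message: str) -> str:
    """Return the name of the agent that should handle the message."""
    targets = {
        Intent.SMALL_TALK: "orchestrator",  # answered directly, no delegation
        Intent.EXPLORE: "data_explorer",
        Intent.QUERY: "query_builder",
        Intent.CHART: "chart",
    }
    return targets[classify(message)]
```

The point of the sketch is the shape, not the rules: small talk resolves to the orchestrator itself, so no specialist is ever invoked for it.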

The specialists

- QueryBuilder Agent – When someone wants numbers ("How many?", "Show me revenue by product"), we delegate here. It knows the schema, builds the query, executes it, and returns structured data. It classifies errors by severity—some are fatal (table not found), some are correctable (type mismatch), some need investigation—and adjusts its retry strategy accordingly. No blind loops.

- DataExplorer Agent – "What tables do I have?", "What's in my database?" – that's exploration. This agent summarizes the schema and suggests dimensions or metrics worth looking at. It's designed to give a useful first answer fast, then go deeper on follow-up.

- Chart Agent – This one handles visualization intelligence. It operates in two modes: first, it analyzes the user's intent and the data structure to select the right chart type (bar, line, KPI, table…); this step needs no tools, only reasoning. Then, once the data is ready, it builds the chart configuration and validates it through a dedicated tool that checks both schema compliance and column existence before submission. Separating type selection from config building lets us optimize each step independently.

- Orchestrator Agent – It's the only one that talks to users. It plans, delegates, and synthesizes. It has its own tool for structured plan management (creating, reading, and updating multi-step plans), but it never runs queries, explores schemas, or builds charts itself. That separation keeps the logic clean and makes it obvious where to fix things when they go wrong.
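The QueryBuilder Agent's severity-based retry strategy can be sketched roughly as follows. This is an illustration under assumptions, not the actual implementation: the `Severity` names, the string matching in `classify_error`, and the retry budgets in `MAX_RETRIES` are all hypothetical, since the article does not state the real rules or limits.

```python
from enum import Enum


class Severity(Enum):
    FATAL = "fatal"              # e.g. table not found: stop immediately
    CORRECTABLE = "correctable"  # e.g. type mismatch: fix the query and retry
    INVESTIGATE = "investigate"  # unclear cause: allow one diagnostic retry


# Hypothetical retry budgets per severity (assumed values, for illustration only).
MAX_RETRIES = {
    Severity.FATAL: 0,
    Severity.CORRECTABLE: 2,
    Severity.INVESTIGATE: 1,
}


def classify_error(message: str) -> Severity:
    """Map an error message to a severity using assumed keyword rules."""
    text = message.lower()
    if "not found" in text or "does not exist" in text:
        return Severity.FATAL
    if "type mismatch" in text or "cannot cast" in text:
        return Severity.CORRECTABLE
    return Severity.INVESTIGATE


def should_retry(message: str, attempts_so_far: int) -> bool:
    """Retry only while the error's severity still has budget left."""
    return attempts_so_far < MAX_RETRIES[classify_error(message)]
```

Because the budget depends on the severity, a fatal error never triggers a second call, which is what rules out blind loops.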

How agents collaborate

The interesting part isn't just that we have specialists—it's how they chain together, each agent's output feeding the next. Take a visualization request like "Show me monthly revenue by region":

1. The Orchestrator Agent classifies this as a chart request and creates a structured plan.

2. The Chart Agent analyzes the intent and selects the best chart type (here, a line chart for temporal data). It also passes hints about what the query should return.

3. The QueryBuilder Agent builds and executes the query, informed by those hints—it knows it needs a time dimension and a regional breakdown.

4. The Chart Agent takes the actual data, builds the chart configuration, validates it through its submission tool, and hands back a ready-to-render visualization.

The Orchestrator Agent ties it all together into a coherent response. Each agent does one thing well, its tools are scoped to that job, and the output of one feeds into the next. When the user explicitly asks for a specific chart type ("Give me a table of…"), we skip the type selection step entirely—fewer calls, faster results.
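To illustrate the two Chart Agent steps in that chain, here is a small Python sketch. Everything in it is assumed for the example: the function names, the `type`/`x`/`y` config keys, and the "monthly" heuristic that stands in for the agent's reasoning. The sample row is made up and is not a real query result.

```python
from typing import Optional

ALLOWED_TYPES = {"bar", "line", "kpi", "table"}
REQUIRED_KEYS = {"type", "x", "y"}  # hypothetical config schema


def select_chart_type(request: str, explicit_type: Optional[str] = None) -> str:
    # When the user names a chart type, the selection step is skipped.
    if explicit_type is not None:
        return explicit_type
    # Assumed heuristic standing in for the agent's reasoning about intent.
    return "line" if "monthly" in request.lower() else "bar"


def validate_config(config: dict, rows: list[dict]) -> list[str]:
    """Return a list of problems; an empty list means the config is valid."""
    problems = []
    # Schema compliance: required keys and a known chart type.
    missing = REQUIRED_KEYS - set(config)
    if missing:
        problems.append(f"missing keys: {sorted(missing)}")
    if config.get("type") not in ALLOWED_TYPES:
        problems.append(f"unknown chart type: {config.get('type')}")
    # Column existence: every referenced column must appear in the data.
    available = set(rows[0]) if rows else set()
    for axis in ("x", "y"):
        column = config.get(axis)
        if column is not None and column not in available:
            problems.append(f"column not in data: {column}")
    return problems


# Made-up sample row, for illustration only.
rows = [{"month": "2026-01", "region": "EMEA", "revenue": 1200}]
chart_type = select_chart_type("Show me monthly revenue by region")
config = {"type": chart_type, "x": "month", "y": "revenue"}
problems = validate_config(config, rows)
```

Returning a list of problems rather than raising on the first one lets the caller see every issue with a config in a single validation pass.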

This same pattern scales to bigger tasks. For dashboard generation, the DataExplorer Agent first surveys the available data, then the Orchestrator Agent plans multiple visualizations and executes each one through the same chain. A full dashboard with four charts might involve a dozen coordinated steps, all managed by a single plan.

Want to try Toucan and embed an analytics assistant in your product?

A predictable flow

Every data request goes through four phases:

classify → plan → execute → respond

Plans are structured and persistent—each step has a status, and we know exactly where we are at any moment. That makes observability and debugging much simpler.
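One way to picture a structured plan with per-step status is the sketch below. The `Plan` and `Step` classes and the four status names are assumptions made for illustration; the article does not describe the real plan format, and persistence is left out here.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class StepStatus(Enum):
    PENDING = "pending"
    RUNNING = "running"
    DONE = "done"
    FAILED = "failed"


@dataclass
class Step:
    agent: str
    task: str
    status: StepStatus = StepStatus.PENDING


@dataclass
class Plan:
    request: str
    steps: list[Step] = field(default_factory=list)

    def current_step(self) -> Optional[Step]:
        """Return the first step that is not done, or None when the plan is complete."""
        for step in self.steps:
            if step.status is not StepStatus.DONE:
                return step
        return None

    def summary(self) -> str:
        """One-line view of where the plan stands, useful for logs."""
        return " | ".join(f"{s.agent}:{s.status.value}" for s in self.steps)


plan = Plan(
    request="Show me monthly revenue by region",
    steps=[
        Step("chart", "select chart type"),
        Step("query_builder", "build and run query"),
        Step("chart", "build and validate config"),
    ],
)
plan.steps[0].status = StepStatus.DONE
```

With the status stored on each step, "where are we?" becomes a lookup instead of something reconstructed from logs.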

We also enforce guards at every level: max tool calls per agent, error classification that decides whether to retry or stop, and a hard timeout on the whole request. When something fails, we stop and ask the user for clarification instead of spinning.
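A guard of this kind can be sketched in a few lines of Python. The `RequestGuard` class, its parameters, and the `BudgetExceeded` exception are hypothetical names, and for brevity the sketch folds the tool-call cap and the request timeout into one object rather than tracking a separate cap per agent.

```python
import time


class BudgetExceeded(Exception):
    """Raised when a request runs out of tool calls or time."""


class RequestGuard:
    def __init__(self, max_tool_calls: int, timeout_s: float, clock=time.monotonic):
        self.max_tool_calls = max_tool_calls
        self.clock = clock
        self.deadline = clock() + timeout_s  # hard deadline for the whole request
        self.calls = 0

    def check(self) -> None:
        """Call before every tool invocation; raises once a limit is hit."""
        if self.clock() > self.deadline:
            raise BudgetExceeded("request timed out")
        if self.calls >= self.max_tool_calls:
            raise BudgetExceeded("tool call limit reached")
        self.calls += 1
```

A caller would catch `BudgetExceeded` and turn it into a clarification question for the user instead of trying again. The injectable `clock` argument exists only so the timeout can be tested without sleeping.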

Principles that shaped the design

We never fabricate data. The assistant only shows results from real queries. If we don't have the data, we ask or delegate. No guessing, no synthetic numbers. That's non-negotiable for analytics.

The orchestrator plans; it doesn't execute. It coordinates. It doesn't build queries, explore schemas, or configure charts. That keeps responsibilities clear and makes it much easier to evolve each piece.

Sub-agents stay internal. They return structured results. The orchestrator turns those into coherent, human responses. One voice, consistent tone, no leaking internals. Users see real-time progress ("Analyzing your data…", "Building your chart…") but the final answer always comes from the Orchestrator Agent.

We cap complexity per request. After a few failed attempts or tool calls, we stop and re-engage the user. Errors are classified—some get a retry, some don't. It's better to ask for clarification than to loop or hallucinate.

What this gives us

- From a build-and-ship perspective: we can add new specialists without reworking the whole system. The Orchestrator Agent gets a new routing rule, a new agent does its job, and we're done. Dashboard generation, for example, was mostly about orchestrating existing agents in a new pattern, not building from scratch.

- From a reliability perspective: we have clear boundaries and fallbacks. When something breaks, we know which agent, which step, which phase. And we avoid the worst failure modes: runaway loops, fabricated data, infinite retries.

- From a team perspective: engineers can own specific agents. Iteration is faster. The system behaves more predictably. That's the kind of architecture that pays off over time.
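The "new routing rule, new agent" idea from the first point can be illustrated with a small registry sketch. The `AGENTS` registry, the `specialist` decorator, and the `forecast` agent are hypothetical and not part of the system described here; they only show how one registration can add a specialist without touching existing handlers.

```python
from typing import Callable

# Maps an intent name to the handler that owns it (assumed structure).
AGENTS: dict[str, Callable[[str], dict]] = {}


def specialist(intent: str):
    """Register a handler as the specialist for one intent."""
    def register(handler: Callable[[str], dict]) -> Callable[[str], dict]:
        AGENTS[intent] = handler
        return handler
    return register


@specialist("explore")
def data_explorer(request: str) -> dict:
    return {"agent": "data_explorer", "request": request}


# Adding a specialist later is one new registration; existing handlers are untouched.
@specialist("forecast")  # hypothetical new agent, invented for this example
def forecaster(request: str) -> dict:
    return {"agent": "forecaster", "request": request}


def delegate(intent: str, request: str) -> dict:
    """Look up the specialist for an intent and hand it the request."""
    return AGENTS[intent](request)
```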

About Toucan

Toucan AI is an AI-native embedded analytics chat that helps SaaS companies integrate analytics directly into their product. Thanks to a semantic layer and natural language question-to-chart capabilities, users can simply ask questions in plain language and get instant visual answers, without writing a single query.

For product teams, this means faster shipping, simpler integration, and an analytics experience that drives higher adoption, better retention, and new revenue opportunities.

Alim Goulamhoussen

Alim is Head of Marketing at Toucan and a growth marketing expert with over 8 years of experience in the SaaS industry. Specialized in digital acquisition, conversion optimization, and scalable growth strategies, he helps businesses accelerate by combining data, content, and automation. On Toucan’s blog, Alim shares practical tips and proven strategies to help product, marketing, and sales teams turn data into actionable insights with embedded analytics. His goal: make data simple, accessible, and impactful to drive business performance.
