Several months ago, we read a really interesting article from Back Market. The article describes how the platform/SRE team prepared and tested their infrastructure for Black Friday, in order to confirm that they could handle five times their usual traffic!

At Toucan Toco, we don't have to face the same traffic, but at our level, it's still important to have an idea of our own limits and know where the bottlenecks are.

So we set out to define a generic method to run load tests on any Toucan Toco "web" resource.

What we need

Before getting practical, it's important to define what is mandatory for us:

- We need a solution that can run at least basic load tests.

  > The k6 solution described in the Back Market article seems to fit: we can run load, spike, stress or soak tests...

- We need a load testing solution that lets any member of the dev team create and run new tests.

  > k6 tests are written in JavaScript, so even the front-end devs (usually not really confident about these topics) can contribute.

- We need a cheap solution.

  > We want a pay-as-you-go model. This is why the k6 cloud approach doesn't match our current needs and basic usage.

- We want to use our current stack as much as possible and not have to invest in new tools.

  > k6 comes with a very well-documented Docker container, and we already have all the processes and tools to automatically provision a k8s cluster and deploy resources on it. So we can choose a self-hosted approach and stay in our comfort zone, where we can easily manage our costs.

- We need reports/data to compare tests and monitor the evolution.

  > k6 logs are really verbose; we can store them in our current ELK stack and create dashboards based on them.

We also checked other tools like Artillery and Gatling, but k6 seemed to tick all our mandatory boxes. Besides, being able to write tests in JavaScript is a nice perk for driving adoption in the team.

So let’s dive into Toucan Toco’s first testing kit!

K6 meets our K8s

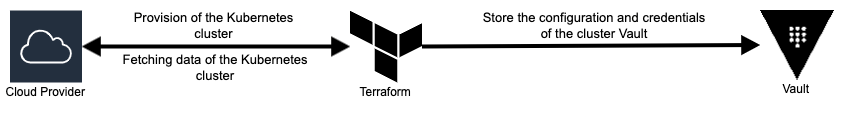

First, we need to create and provision a Kubernetes cluster. This step is done with Terraform and Vault.

Terraform creates the cluster on a given cloud provider and then writes all the necessary information to our Vault, including the kubeconfig file.

Our Kubernetes cluster comes with a pool of nodes dedicated to running the load test scenarios. These instances are quite large (48 CPUs / 256 GB RAM), and the pool's minimum number of nodes is set to zero so that no node is running when there is no load test to run.

Now that we have our cluster, the next step is to write and deploy the test script. k6 provides an efficient and easy way to write tests: whether you are familiar with JavaScript or not, it only takes a few minutes and a few lines of code to complete a script.

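```javascript
import http from 'k6/http';

// Run 10 virtual users in parallel for 30 seconds.
export const options = {
  vus: 10,
  duration: '30s',
};

// Each virtual user executes this function in a loop until the test ends.
export default function () {
  http.get('https://example.com');
}
```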

In this example, 10 virtual users request our website in a loop, as fast as they can, for 30 seconds. We recommend consulting the k6 documentation for more information and relevant test scenarios.
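The other test types mentioned earlier (load, spike, stress, soak) mostly differ in how the number of virtual users changes over time, which k6 expresses through its `stages` option. The sketch below shows one possible way to keep such profiles side by side. The `PROFILES` and `optionsFor` names, and all durations and targets, are illustrative assumptions, not the values we run in production.

```javascript
// Illustrative ramping profiles for k6's `stages` option.
// All durations and virtual-user targets are assumptions for this sketch.
const PROFILES = {
  // Load: ramp up to the expected traffic, hold it, then ramp down.
  load: [
    { duration: '2m', target: 50 },
    { duration: '10m', target: 50 },
    { duration: '2m', target: 0 },
  ],
  // Spike: jump abruptly to a very high level, then drop back.
  spike: [
    { duration: '10s', target: 500 },
    { duration: '1m', target: 500 },
    { duration: '10s', target: 0 },
  ],
  // Stress: keep climbing past the expected traffic to find the breaking point.
  stress: [
    { duration: '5m', target: 100 },
    { duration: '5m', target: 200 },
    { duration: '5m', target: 400 },
    { duration: '2m', target: 0 },
  ],
  // Soak: moderate traffic held for hours to reveal leaks and slow degradation.
  soak: [
    { duration: '5m', target: 50 },
    { duration: '4h', target: 50 },
    { duration: '5m', target: 0 },
  ],
};

// Build a k6 options object for a named profile.
function optionsFor(profileName) {
  const stages = PROFILES[profileName];
  if (!stages) {
    throw new Error(`Unknown profile: ${profileName}`);
  }
  return { stages };
}

// In a k6 script, this would replace the fixed `vus`/`duration` pair:
//   export const options = optionsFor('spike');
```

Keeping the profiles as plain data means the request logic in the default function stays the same, whatever kind of test is run.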

Now that we have a test scenario, let's deploy it!

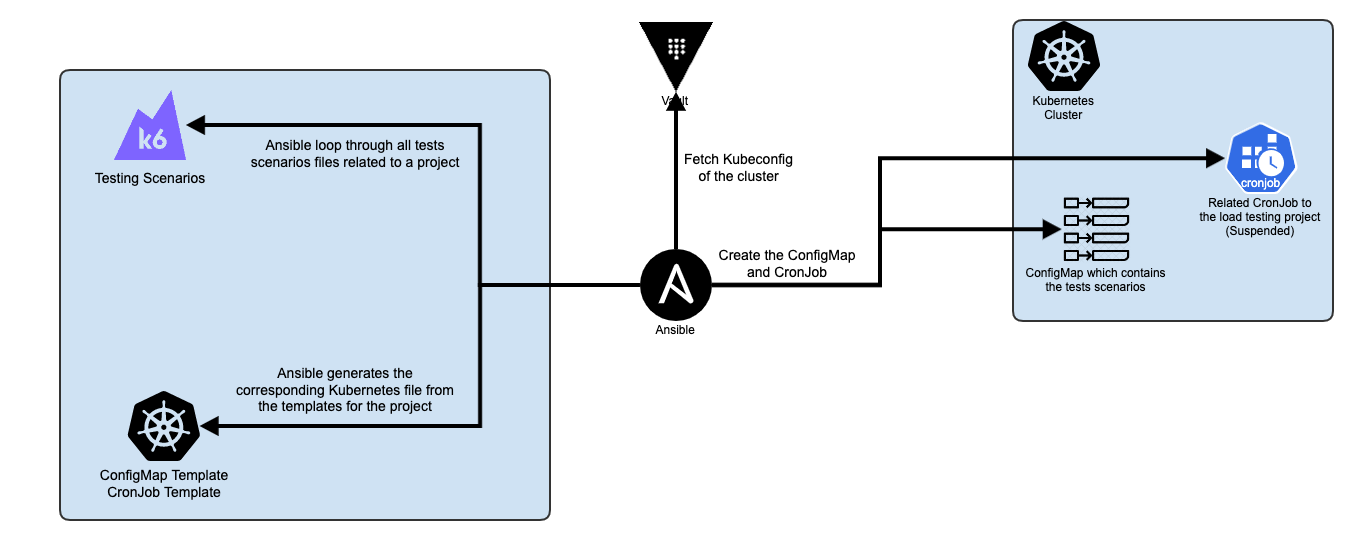

To do this, we create a pull request on our dedicated Git repository, which is essentially an Ansible playbook with all our hand-crafted k6 scenarios. This Ansible playbook manages all the steps of the deployment.

First, the playbook creates a dedicated ConfigMap for the test project that contains the test scenarios, then it creates the dedicated CronJob used to trigger the tests when needed. All these projects are hosted in the same namespace. CronJobs are deployed in a suspended state and are only used as a way to start a load test job, so they never run unless someone triggers them manually.
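To make the shape of these resources more concrete, here is a minimal sketch of what a suspended CronJob for one scenario could look like, written as a JavaScript object since Kubernetes also accepts JSON manifests. It is not an extract from our playbook: the `buildK6CronJob` function, the namespace, the resource names, the node selector label and the script path are all hypothetical placeholders.

```javascript
// Build a suspended CronJob manifest for one k6 scenario.
// Every name, label and path below is a hypothetical placeholder.
function buildK6CronJob(project) {
  const scriptVolume = 'k6-scripts';
  return {
    apiVersion: 'batch/v1',
    kind: 'CronJob',
    metadata: { name: `k6-${project}`, namespace: 'load-tests' },
    spec: {
      // A schedule is mandatory, but it never fires while `suspend` is true.
      schedule: '0 0 1 1 *',
      suspend: true,
      jobTemplate: {
        spec: {
          backoffLimit: 0, // do not retry a failed load test
          template: {
            spec: {
              restartPolicy: 'Never',
              // Assumed label selecting the dedicated load-test node pool.
              nodeSelector: { pool: 'load-tests' },
              containers: [
                {
                  name: 'k6',
                  image: 'grafana/k6',
                  args: ['run', '/scripts/test.js'],
                  volumeMounts: [{ name: scriptVolume, mountPath: '/scripts' }],
                },
              ],
              // The ConfigMap holding the scenario is mounted as /scripts.
              volumes: [
                { name: scriptVolume, configMap: { name: `k6-${project}-scripts` } },
              ],
            },
          },
        },
      },
    },
  };
}

// The JSON output can be piped to `kubectl apply -f -`.
console.log(JSON.stringify(buildK6CronJob('website'), null, 2));
```

With a manifest like this, one common way to start a run by hand is `kubectl create job --from=cronjob/k6-website <job-name>`, which creates a one-off Job from the CronJob's template.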

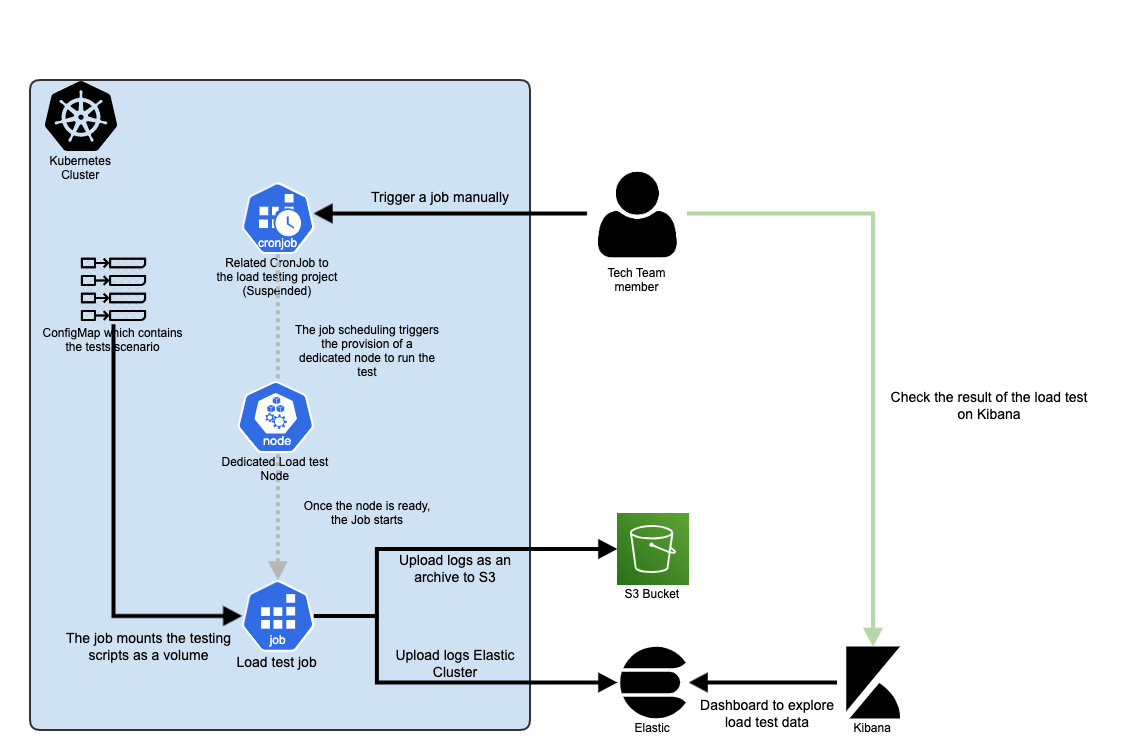

For log management, our cluster runs a Filebeat service that forwards all k6 job logs to our Elastic cluster. Because of the size of the logs, our Elastic cluster keeps them for only 15 days. However, in case we want to compare a recent test with an old one, each test result is also stored in an S3 bucket and can easily be restored into Elastic on demand.
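To give an idea of what comparing a recent test with an old one can mean in practice, here is a small sketch that summarizes the request durations of two runs. It assumes each run was exported with k6's JSON output (`--out json=...`), where every line is a JSON object and metric samples have `type: "Point"`. The function names and the sample values are made up for the example, and this is not the logic behind our Kibana dashboards.

```javascript
// Extract http_req_duration samples (in ms) from k6 JSON output lines.
function durationsFrom(jsonLines) {
  return jsonLines
    .split('\n')
    .filter((line) => line.trim() !== '')
    .map((line) => JSON.parse(line))
    .filter((entry) => entry.type === 'Point' && entry.metric === 'http_req_duration')
    .map((entry) => entry.data.value);
}

// Nearest-rank percentile: the smallest sample such that at least
// p percent of all samples are less than or equal to it.
function percentile(values, p) {
  if (values.length === 0) return NaN;
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

// Reduce one run to a few numbers that are easy to compare.
function summarize(jsonLines) {
  const durations = durationsFrom(jsonLines);
  return {
    count: durations.length,
    p50: percentile(durations, 50),
    p95: percentile(durations, 95),
  };
}

// Made-up sample data standing in for two stored test results.
const point = (value) =>
  JSON.stringify({ type: 'Point', metric: 'http_req_duration', data: { value } });
const oldRun = [120, 130, 150, 900].map(point).join('\n');
const newRun = [100, 110, 115, 300].map(point).join('\n');

console.log('old run:', summarize(oldRun));
console.log('new run:', summarize(newRun));
```

Percentiles are usually more telling than averages here, because a handful of very slow requests can hide behind a reasonable mean.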

When a job is triggered, the cluster provisions a dedicated node on which the job runs; at the end of the job, the node is removed from the cluster. This way, the cloud provider only charges us for the time we actually use.

Wrapping up

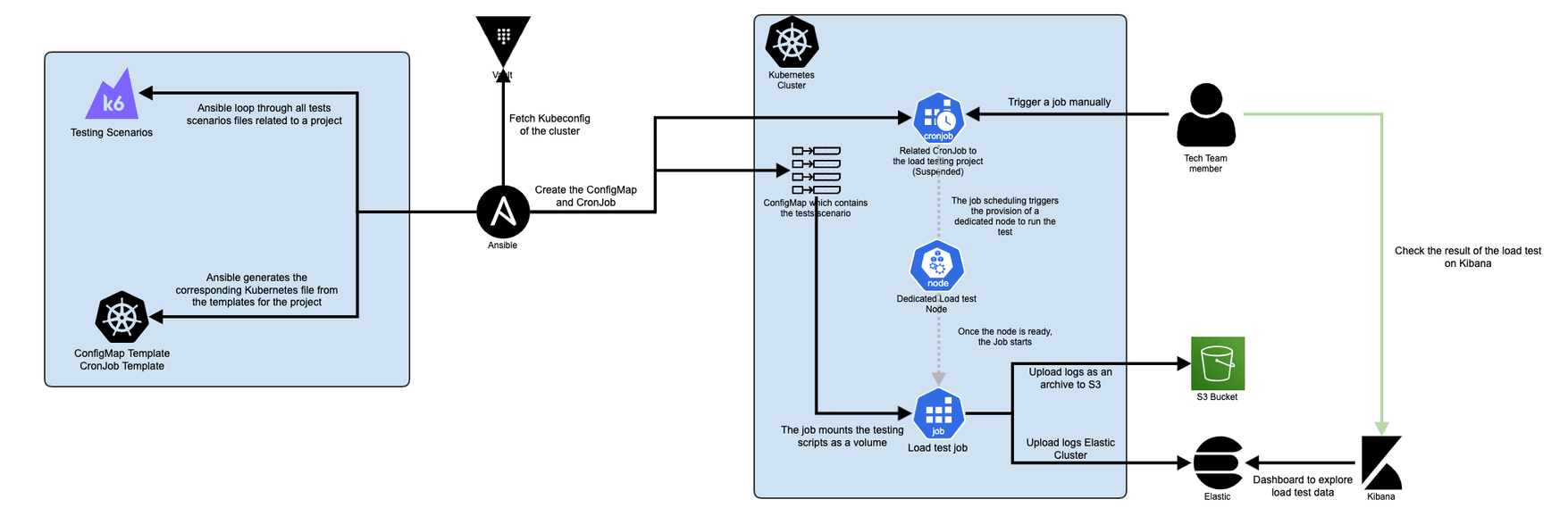

The final big picture looks like this:

After several months of usage:

- We are pretty happy with our solution around k6.
- Because we already had several of the pieces (provisioning with Terraform, deployment with Ansible, a k8s cluster, and logs centralized in an ELK stack), there were fewer than 10 days between reading the Back Market blog post and our first "production" load test.
- We ran more than 50 big load tests and it cost only 16.54 euros on our Scaleway bill! Of course, that only covers the compute needed to run the tests.
- Several tests have been created to load test several production services.
- Thanks to this new tool and stack, we are now able to spot the weakest points of our production services and improve/fix them!
- And we can follow the evolution of the product's performance over time.

However, we are clear-eyed about this solution's limits:

- It's totally homemade and self-managed... and we need to maintain it and keep it up to date ourselves (which is not free!).
- Let's be honest: our Kibana dashboards are cool, but they are clearly less fancy than what you can get with k6 cloud or Datadog.