Introducing our new Multi-Tenant service to boost Data Execution speed and efficiency


David Nowinsky

Published 27.08.25

Updated 14.01.26

3 min


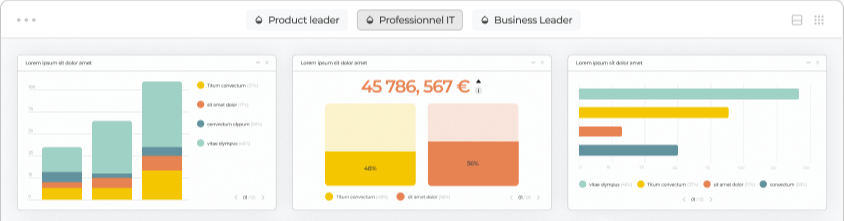

At the heart of our mission is a simple but clear goal: to make customer-facing analytics easy to deploy, highly scalable, and a delight to use. We believe that when KPIs are easy to access and understand, more people can make better decisions, faster.

However, delivering a great user experience comes with challenges, especially when it comes to performance. As data volumes grew, we found ourselves repeatedly asking: how long is too long for a dashboard to load? The truth was, we weren’t satisfied. Execution performance became a clear bottleneck, holding back the level of service we want to provide.

That is the spirit in which we built our new Multi-Tenant service: to deliver faster, more responsive dashboards for all users.

A vision guided by our past

Historically, our architecture has been shaped by our project-based origins. Each Toucan instance was initially designed to serve the specific needs of an individual client or project. Naturally, this led us to favor a highly siloed model: every client has their own dedicated instance, with a front end connected to a backend and its own database.

To keep operations simple, we chose to implement a large monolithic backend that manages all aspects of our product, a structure that has grown and accumulated features over the years as our offering expanded.

From Single-Tenant to Multi-Tenant: A Necessary Evolution

Over the years, our backend evolved into a powerful, feature-rich system, but it struggled to deliver the performance we needed for the day-to-day tasks it was supposed to handle. It also lacked redundancy and suffered from recurring performance bottlenecks.

That’s why we began migrating backend logic into targeted, multi-tenant services, each with a clear and specific objective.

When it came to data, we had several key requirements in mind:

- We needed high performance for data access.
- We wanted a solution that would be resource-efficient for our clients.
- We aimed for robust management of the most commonly used data providers.
- We required full support of the transformation steps of our no-code query builder (aka “YouPrep”).
- We needed a typed data exchange format to:
  - make data exchanges more efficient,
  - eliminate entire classes of bugs related to data typing, such as performing mathematical operations on strings or handling divisions between integers and floats.
- We also wanted to keep the data hosting part as isolated as possible.
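To make the typing argument concrete, here is a toy sketch of a column that carries its type alongside its values. It is not Apache Arrow and not Toucan's actual format; the `Column` class, the type names and `ratio()` are hypothetical. The point is that a string slipping into a numeric column is rejected when the data is built, and that dividing two integer columns has a declared float result instead of depending on whatever the consuming runtime happens to do.

```python
from dataclasses import dataclass

# Hypothetical logical types for this sketch only; a real typed format such as
# Apache Arrow defines a much richer type system.
TYPES = {"int64": int, "float64": float, "string": str}
NUMERIC = {"int64", "float64"}


@dataclass
class Column:
    name: str
    dtype: str      # one of the keys of TYPES
    values: list

    def __post_init__(self) -> None:
        expected = TYPES[self.dtype]
        for v in self.values:
            # bool is a subclass of int in Python, so reject it explicitly.
            if isinstance(v, bool) or not isinstance(v, expected):
                raise TypeError(f"{self.name}: {v!r} is not {self.dtype}")


def ratio(num: Column, den: Column) -> Column:
    """Divide two numeric columns element-wise; the result type is always float64."""
    if num.dtype not in NUMERIC or den.dtype not in NUMERIC:
        raise TypeError("ratio() expects numeric columns")
    # True division on floats: int64 / int64 yields floats, never truncated ints.
    return Column(f"{num.name}/{den.name}", "float64",
                  [float(a) / b for a, b in zip(num.values, den.values)])


sales = Column("sales", "int64", [7, 4])
orders = Column("orders", "int64", [2, 2])
print(ratio(sales, orders).values)   # [3.5, 2.0]

try:
    Column("sales", "int64", ["7"])  # a string slipping into a numeric column
except TypeError as err:
    print(err)                       # sales: '7' is not int64
```

Failing at construction time keeps the type error next to its cause, rather than surfacing later as a wrong number on a dashboard.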

All of this had to be achieved while continuing to support existing pipelines, whether native SQL executed in memory within Toucan or hybrid pipelines.

Introducing Our Dedicated Service: HADES

Architecture Overview

Taking into account the constraints described above, we arrived at the following solution:

Components

- Toucan App (front end): the dedicated customer front end, which interacts with HADES to request dataset results.
- HADES: the service responsible for scheduling, executing, and caching data queries.
- Dataset Service & Vault: multi-tenant services that provide dataset configurations and securely manage connector secrets.
- External Data Sources: such as PostgreSQL, MySQL, Google BigQuery, or S3 buckets, which store customer datasets.
- Customer-dedicated S3 Buckets: used to cache live query results, ensuring data isolation between tenants.
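One way to read "customer-dedicated S3 buckets" is that the cache location is a pure function of the tenant and the dataset UID. The sketch below assumes a hypothetical naming scheme (`hades-cache-<tenant>` buckets holding `datasets/<uid>.arrow` objects); the article does not describe the real layout. What it illustrates is that the bucket depends on the tenant alone, so no dataset UID can resolve into another tenant's bucket.

```python
import re
from dataclasses import dataclass

# Hypothetical rules: tenant ids are short lowercase slugs that start and end
# with a letter or digit. This helps keep the derived bucket name within S3's
# basic naming rules (lowercase letters, digits, hyphens, 3-63 characters).
TENANT_ID = re.compile(r"[a-z0-9](?:[a-z0-9-]{0,38}[a-z0-9])?")
# Dataset UIDs may not contain "/" or ".", so they cannot escape the prefix.
DATASET_UID = re.compile(r"[A-Za-z0-9_-]+")


@dataclass(frozen=True)
class CacheLocation:
    bucket: str
    key: str

    def uri(self) -> str:
        return f"s3://{self.bucket}/{self.key}"


def cache_location(tenant_id: str, dataset_uid: str) -> CacheLocation:
    """Return where the cached Arrow file for a tenant's dataset lives."""
    if not TENANT_ID.fullmatch(tenant_id):
        raise ValueError(f"invalid tenant id: {tenant_id!r}")
    if not DATASET_UID.fullmatch(dataset_uid):
        raise ValueError(f"invalid dataset uid: {dataset_uid!r}")
    return CacheLocation(bucket=f"hades-cache-{tenant_id}",
                         key=f"datasets/{dataset_uid}.arrow")


print(cache_location("acme", "sales-by-region").uri())
# s3://hades-cache-acme/datasets/sales-by-region.arrow
```

Validating identifiers before building the path matters as much as the naming scheme itself: without it, a crafted UID such as `../other` could try to walk out of the intended prefix.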

How HADES works

- Query Initiation

When a user interacts with their Toucan App, the front end sends a query to HADES, requesting dataset results by a unique identifier (UID).

- Dataset Configuration & Secrets

HADES needs to know how to access the requested dataset. It queries the Dataset Service for the full dataset configuration. If connector secrets are required (for example, database credentials), the Dataset Service retrieves them from the Vault, a secure, multi-tenant secrets manager.

- Scheduling & Executing Live Queries

With configuration and secrets in hand, HADES schedules a live query job via its worker process. This job fetches live data from the appropriate external source (e.g., PostgreSQL, MySQL, BigQuery, or S3).

- Caching for Performance

To optimize performance and reduce redundant queries, HADES caches the results of live queries in a customer-dedicated S3 bucket. Each dataset is stored as an Apache Arrow file, enabling efficient storage and retrieval.

- Serving Results

When the front end requests results, the HADES API process checks the cache first. If cached data is available, it is served directly from S3. Otherwise, a new live query job is scheduled.
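The five steps above follow a cache-aside pattern. The sketch below models them in memory so the control flow is easy to follow. Every name in it (`DatasetService.resolve`, `Hades.get_results`, `fake_postgres`, the secret name) is hypothetical. A dict stands in for the per-tenant S3 buckets and a plain function stands in for the worker. Unlike the real service, the live query runs synchronously instead of as a scheduled job, and cache invalidation is not modeled.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Rows = List[dict]


@dataclass(frozen=True)
class DatasetConfig:
    uid: str
    connector: str                    # e.g. "postgresql" or "bigquery"
    query: str
    secret_ref: Optional[str] = None  # name of the connector secret, if one is needed


class DatasetService:
    """Stand-in for the Dataset Service, which looks up secrets in the Vault."""

    def __init__(self, configs: Dict[Tuple[str, str], DatasetConfig], vault: Dict[str, str]):
        self._configs = configs  # (tenant, uid) -> config
        self._vault = vault      # secret_ref -> secret value

    def resolve(self, tenant: str, uid: str) -> Tuple[DatasetConfig, Optional[str]]:
        config = self._configs[(tenant, uid)]  # KeyError if the tenant has no such dataset
        secret = self._vault[config.secret_ref] if config.secret_ref else None
        return config, secret


class Hades:
    """Cache-aside flow: serve from cache, otherwise resolve, run a live query, then cache."""

    def __init__(self, datasets: DatasetService,
                 run_live_query: Callable[[DatasetConfig, Optional[str]], Rows]):
        self._datasets = datasets
        self._run_live_query = run_live_query          # stands in for the worker process
        self._cache: Dict[Tuple[str, str], Rows] = {}  # stands in for per-tenant S3 buckets
        self.live_queries = 0

    def get_results(self, tenant: str, uid: str) -> Rows:
        key = (tenant, uid)
        if key in self._cache:                       # hit: the external source is not queried
            return self._cache[key]
        config, secret = self._datasets.resolve(tenant, uid)
        rows = self._run_live_query(config, secret)  # synchronous here, a scheduled job in HADES
        self.live_queries += 1
        self._cache[key] = rows                      # HADES stores an Arrow file in S3 instead
        return rows


def fake_postgres(config: DatasetConfig, secret: Optional[str]) -> Rows:
    # Hypothetical data source; a real worker would connect using `secret`.
    return [{"region": "EU", "sales": 120}, {"region": "US", "sales": 95}]


service = DatasetService(
    configs={("acme", "sales"): DatasetConfig("sales", "postgresql",
                                              "SELECT region, sales FROM t", "acme-pg")},
    vault={"acme-pg": "not-a-real-password"},
)
hades = Hades(service, fake_postgres)
hades.get_results("acme", "sales")   # miss: runs the live query
hades.get_results("acme", "sales")   # hit: served from the cache
print(hades.live_queries)            # 1
```

Keying both the configuration lookup and the cache on `(tenant, uid)` means a request from another tenant for the same UID neither reads the cached rows nor resolves the original tenant's secret.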

Security & Multi-Tenancy

- Multi-Tenant Isolation: Each customer’s data is isolated at every layer (front end, S3 bucket, and dataset configuration).
- Kubernetes Authentication: All inter-service authentication (between HADES, the Dataset Service, and the Vault) is handled using robust Kubernetes (K8S) authentication mechanisms, ensuring secure, identity-based access across services.
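The article does not say which Kubernetes mechanism is used. As an illustration only, here is one common pattern, assuming the Vault is HashiCorp Vault with its Kubernetes auth method enabled at the default mount path. A pod presents its service-account token and a role name to Vault and receives a Vault token in exchange. The Vault address and the `hades` role below are hypothetical. The sketch only builds the request, so it can run outside a cluster.

```python
import json
import urllib.request

# Path where Kubernetes mounts a pod's service-account token by default.
SA_TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def read_service_account_token(path: str = SA_TOKEN_PATH) -> str:
    with open(path, encoding="utf-8") as f:
        return f.read().strip()


def vault_k8s_login_request(vault_addr: str, role: str, jwt: str) -> urllib.request.Request:
    """Build (without sending) a login request for Vault's Kubernetes auth method.

    Assumes HashiCorp Vault with the Kubernetes auth method enabled at its
    default mount path, "kubernetes".
    """
    body = json.dumps({"role": role, "jwt": jwt}).encode("utf-8")
    return urllib.request.Request(
        url=f"{vault_addr.rstrip('/')}/v1/auth/kubernetes/login",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Inside a pod (hypothetical Vault address and role name):
# req = vault_k8s_login_request("https://vault.internal:8200", "hades",
#                               read_service_account_token())
# with urllib.request.urlopen(req) as resp:
#     vault_token = json.load(resp)["auth"]["client_token"]
```

With this approach no long-lived credential is baked into the service. Vault checks the pod's identity against the cluster and maps it to a role that grants access only to the secrets that service needs.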

Better than ever

The new service brings major optimizations to data-intensive dashboards and home pages powered by live data queries.

On the same dashboard, the Largest Contentful Paint (LCP) changed as follows:

- Before: 7.10 seconds, resulting in noticeable delays for users waiting for the main content to load.
- Now: reduced to just 1.59 seconds, so critical information appears much faster.

The same dashboard, now powered by our Data Execution service
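For a sense of scale, the two LCP measurements above work out to roughly a 4.5× speed-up, or a reduction of about three quarters. For reference, Google's Core Web Vitals guidance treats an LCP of 2.5 s or less as good and anything above 4 s as poor, which places this dashboard's before and after measurements on opposite sides of those thresholds.

```latex
\frac{7.10\,\text{s}}{1.59\,\text{s}} \approx 4.47
\qquad
\frac{7.10\,\text{s} - 1.59\,\text{s}}{7.10\,\text{s}}
  = \frac{5.51}{7.10} \approx 0.776
  \;\;(\approx 77.6\%\ \text{reduction})
```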

We are committed to performance, and these improvements reflect our dedication to delivering high-performance, data-driven dashboards that meet the needs of modern product teams.


David Nowinsky

David is the Chief Technology Officer (CTO) at Toucan, the embedded analytics platform built for SaaS companies that want to deliver seamless, impactful data experiences to their users. With deep expertise in software engineering, data architecture, and product innovation, David leads the technical vision behind Toucan’s mission to make analytics simple, accessible, and scalable. On Toucan’s blog, David shares insights on embedded analytics best practices, technical deep-dives, and the latest trends shaping the future of SaaS product development. His articles help technical teams build better products, faster—without compromising on user experience.
